Airflow Project

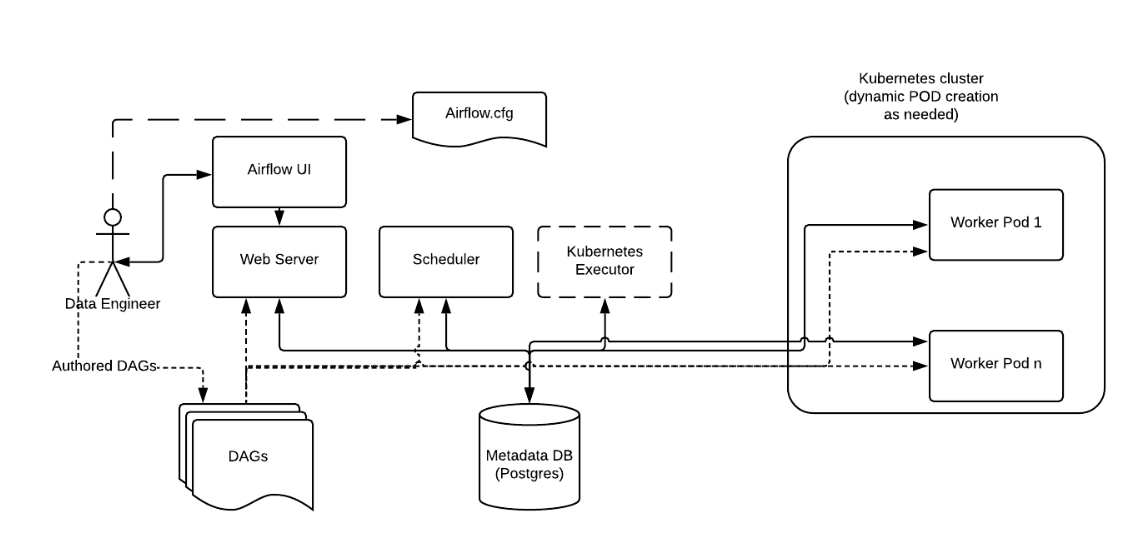

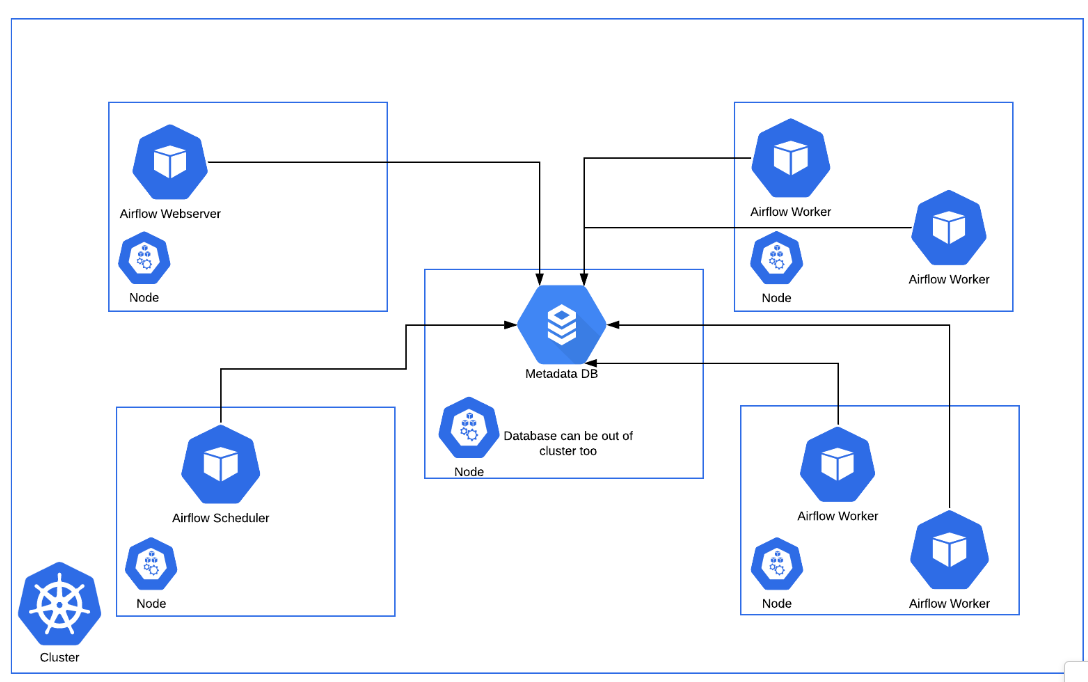

Airflow system architecture:

GitHub link: https://github.com/eduhub123/ETL_Data

Presentation link: https://docs.google.com/presentation/d/1YkFHazP5aX1QV3YO5wkiSlJtfCb1v4uB/edit#slide=id.g27fa31ae64b_0_17

Project Structure

The folder structure for the Data Pipeline Framework can be organized as follows:

    ETL_DATA/
    │
    ├── dags/
    │   ├── etl_pipeline_example.py
    │   ├── etl_pipeline_example2.py
    │   └── ...
    │
    ├── operators/
    │   ├── __init__.py
    │   ├── data_extractor.py
    │   ├── data_transformer.py
    │   └── data_loader.py
    │
    ├── utils/
    │   ├── __init__.py
    │   ├── aws.py
    │   └── utils.py
    ├── tests/
    │   ├── __init__.py
    │   ├── operator_tests.py
    │   └── pipeline1_tests.py
    ├── requirements.txt
    ├── .env
    ├── .airflowignore
    ├── docker_file
    ├── docker-compose.yaml
    └── README.md

Folder Structure Explanation:

dags/ folder - Contains the main DAG files that define the ETL workflows.

operators/ folder - Contains the custom Airflow operators specific to the ETL pipeline framework:

- data_extractor.py: Implementation of the DataExtractorOperator, which fetches data from an API and uploads it to an S3 bucket.
- data_transformer.py: Implementation of the DataTransformerOperator, which handles data cleansing, validation, and transformation.
- data_loader.py: Implementation of the RedshiftUpsertOperator, which loads data from S3 into Redshift staging tables and upserts it into the appropriate target tables.
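The staging-table upsert described for data_loader.py can be sketched as a small SQL builder. This is a minimal illustration of the common Redshift pattern (stage the rows, delete matching rows from the target, insert the staged rows), not the actual RedshiftUpsertOperator code. The function name `build_upsert_statements`, the table names, the S3 URI, the IAM role, and the JSON input format are all assumptions made for the example.

```python
from typing import List, Sequence


def build_upsert_statements(
    target_table: str,
    staging_table: str,
    key_columns: Sequence[str],
    s3_uri: str,
    iam_role: str,
) -> List[str]:
    """Return the SQL statements for a staging-table upsert, in execution order.

    Hypothetical helper: identifiers are interpolated directly, so they must
    come from trusted configuration, never from user input.
    """
    if not key_columns:
        raise ValueError("at least one key column is required to match rows")

    # Match target rows against staged rows on every key column.
    join_condition = " AND ".join(
        f"{target_table}.{col} = {staging_table}.{col}" for col in key_columns
    )

    return [
        # 1. Temporary staging table with the same columns as the target.
        f"CREATE TEMP TABLE {staging_table} (LIKE {target_table});",
        # 2. Bulk-load the extracted files from S3 into the staging table
        #    (JSON input is an assumption of this sketch).
        f"COPY {staging_table} FROM '{s3_uri}' "
        f"IAM_ROLE '{iam_role}' FORMAT AS JSON 'auto';",
        # 3. Replace existing rows atomically: delete matches, insert staged rows.
        "BEGIN;",
        f"DELETE FROM {target_table} USING {staging_table} WHERE {join_condition};",
        f"INSERT INTO {target_table} SELECT * FROM {staging_table};",
        "COMMIT;",
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    for statement in build_upsert_statements(
        target_table="events",
        staging_table="events_staging",
        key_columns=["event_id"],
        s3_uri="s3://example-bucket/events/",
        iam_role="arn:aws:iam::123456789012:role/example-redshift-copy",
    ):
        print(statement)
```

Wrapping the delete and insert in one transaction means readers of the target table never see it with the old rows removed but the new rows not yet inserted.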

utils/ folder - Contains utility functions to be used across the framework, e.g., interacting with Redshift.

tests/ folder - Contains all the test files for custom operators, hooks, and utility functions.
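As an illustration of what a file in tests/ might contain, the sketch below unit-tests a small cleansing helper with the standard `unittest` module. The helper `clean_records` and its behavior are hypothetical stand-ins for logic a transformer could delegate to. They are not taken from the repository.

```python
import unittest
from typing import Dict, List


def clean_records(
    records: List[Dict[str, object]], required: List[str]
) -> List[Dict[str, object]]:
    """Hypothetical helper: drop rows missing required fields, trim strings."""
    cleaned = []
    for record in records:
        # Validation: skip records where a required field is absent or empty.
        if any(record.get(field) in (None, "") for field in required):
            continue
        # Cleansing: strip surrounding whitespace from string values.
        cleaned.append(
            {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        )
    return cleaned


class CleanRecordsTest(unittest.TestCase):
    def test_drops_records_missing_required_field(self):
        records = [{"id": 1, "name": "a"}, {"id": None, "name": "b"}, {"name": "c"}]
        self.assertEqual(clean_records(records, ["id"]), [{"id": 1, "name": "a"}])

    def test_strips_whitespace_from_strings(self):
        records = [{"id": 2, "name": "  Alice "}]
        self.assertEqual(
            clean_records(records, ["id"]), [{"id": 2, "name": "Alice"}]
        )

    def test_empty_input_returns_empty_list(self):
        self.assertEqual(clean_records([], ["id"]), [])
```

Saved as a module under tests/, the cases can be run with `python -m unittest` followed by the module name.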

docker/ folder - Contains the Dockerfiles and docker-compose.yaml used to deploy and run the pipelines.

requirements.txt - Lists all the dependencies needed for the execution environment.

Existing data pipelines:

- Pipeline Event CleverTap:

- Pipeline Airbridge

- Pipeline